Bending People’s Perception to Your Will

Mar 11, 2024

That’s right. It’s a Twitter hashtag etched in ancient stone, a timeless reminder of Julius Caesar’s fate. Sounds like a joke, right? But what if that history had played out on the internet? Imagine the impact radical ideas could have in unstable times through sheer reach alone.

The power of technology isn’t just in our hands; it’s at our fingertips, ready to shape minds, elections, and even the fabric of society. From spreading ill will and tailoring propaganda to sway public opinion, to amassing virtual armies that echo our loudest thoughts, the potential for misuse is staggering.

Imagine Julius Caesar today, not fighting battles with swords, but battling it out on social media instead. “The die is cast,” he posts, but this time it’s a tweet going viral, not soldiers crossing the Rubicon (ref. Caesar Crosses the Rubicon). A single provocative idea ignites the digital realm: “What if we ruled ourselves?” Suddenly, Rome’s streets are empty. Caesar’s empire, once unshakeable in its marble and might, crumbles, threatened not by (et tu?) Brute force, but by poisoned minds, mobilized by nothing more than a compelling narrative and a charismatic digital presence. A narrative is not hard to construct and validate artificially. Charisma, well, is a factor from a bygone era, since many ideas now spread through our digital social networks.

The saying “the pen is mightier than the sword” has always carried weight because historians could shape how we see rulers, sometimes by their own choice, sometimes on orders. But today, being a thought leader is even more powerful.

Think about it: technology can do much more than grab headlines. It can make learning come alive, let us feel what it was like to live in the past, or even give us digital friends when we’re lonely. As we move into a future filled with tech, we have to ask ourselves: How do we make sure our new tools are used for good, not bad? How do we keep our inventions honest and helpful? How do we make sure propaganda doesn’t manipulate us into puppets of an algorithm?

–

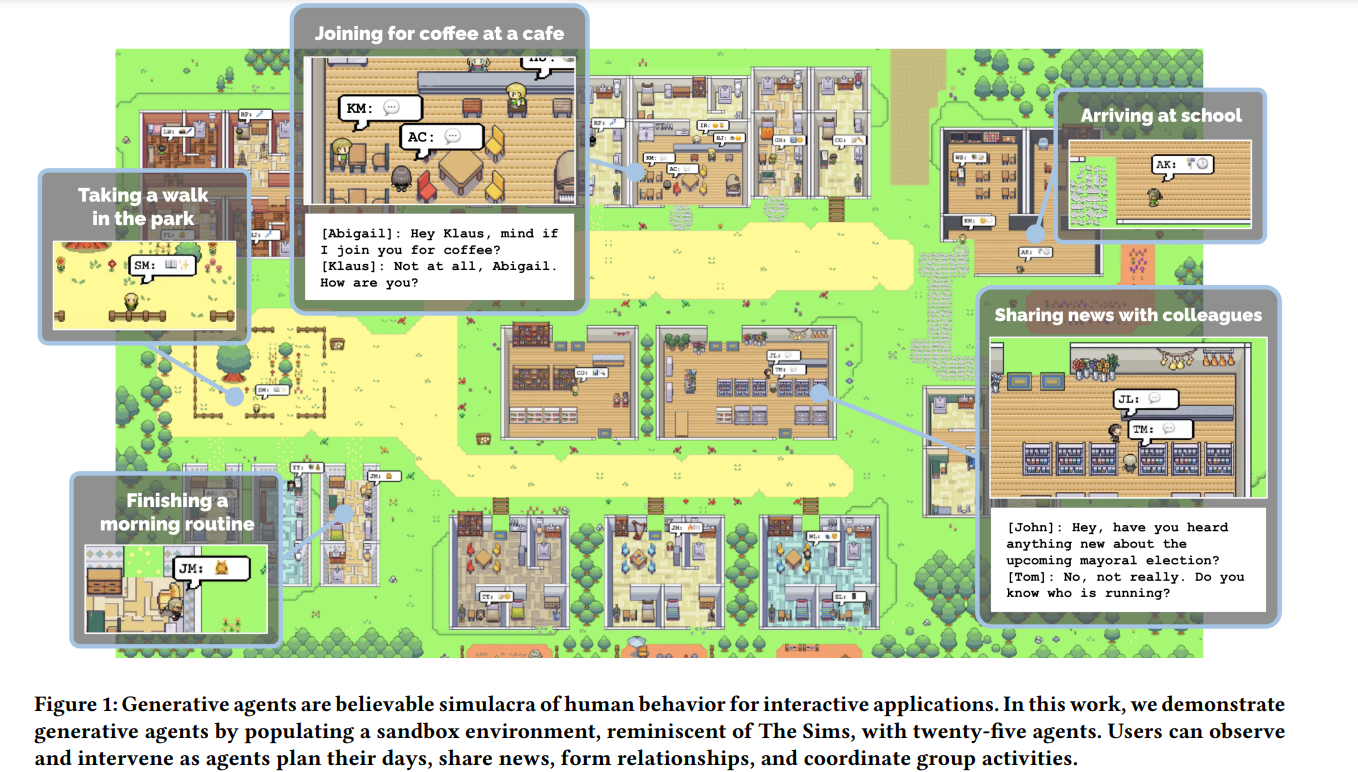

Generative Agents: Interactive Simulacra of Human Behavior is a research paper in Human-Computer Interaction (HCI). It introduces generative agents designed to simulate believable human behavior for interactive applications, along with a novel architecture, built on a large language model, that lets these agents remember, retrieve, reflect, interact, and plan as their circumstances evolve. The key contributions: believable simulacra of human behavior, an architecture that keeps agents coherent over the long term by managing their memories, and a demonstration of generative agents in a sandbox environment reminiscent of The Sims. Here, Stanford’s Joon Sung Park, the paper’s first author, explains it in great detail, and I recommend it for appreciating the underlying beauty of this simple architecture.

The authors built digital characters, called “generative agents,” that mimic human behavior in a surprisingly realistic way. These agents go about their daily lives, from cooking breakfast to heading off to work. Their “digital brain” is a memory of past experiences, recorded in natural language, that they can retrieve and use to decide what to do next.
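To make the “remember and retrieve” idea concrete, here is a minimal Python sketch in the spirit of the paper, which ranks an agent’s stored memories by a combination of recency, importance, and relevance to the current situation. Everything named here (`Memory`, `retrieve`, the sample memories) is hypothetical. The 0.995-per-hour decay, the equal weights, and the min-max normalization are assumptions of this sketch, and where the paper uses a language model to rate importance and to embed text, this sketch simply takes hand-supplied numbers and toy 2-D vectors.

```python
import math
from dataclasses import dataclass


@dataclass
class Memory:
    text: str                # natural-language description of an experience
    embedding: list[float]   # hypothetical precomputed embedding of `text`
    importance: float        # e.g. a 1-10 rating assigned when the memory is stored
    last_accessed: float     # simulated time (hours) the memory was last retrieved


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def min_max(values: list[float]) -> list[float]:
    """Rescale values to [0, 1]; a constant list maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def retrieve(memories, query_embedding, now, k=3, decay=0.995):
    """Return the k memories with the highest recency + importance + relevance."""
    # Recency: exponential decay in the hours since the memory was last accessed.
    recency = [decay ** (now - m.last_accessed) for m in memories]
    importance = [m.importance for m in memories]
    # Relevance: similarity between each memory and the current situation (the query).
    relevance = [cosine(m.embedding, query_embedding) for m in memories]
    # Equal weights after normalization (an assumption in this sketch).
    scores = [
        r + i + v
        for r, i, v in zip(min_max(recency), min_max(importance), min_max(relevance))
    ]
    ranked = sorted(zip(scores, memories), key=lambda pair: pair[0], reverse=True)
    return [m for _, m in ranked[:k]]


# Made-up example memories with toy 2-D "embeddings".
memories = [
    Memory("Isabella is planning a Valentine's Day party", [1.0, 0.0], 8, 10.0),
    Memory("Ate toast for breakfast", [0.0, 1.0], 1, 11.0),
    Memory("Read a news article about local politics", [0.5, 0.5], 4, 2.0),
]
for m in retrieve(memories, query_embedding=[1.0, 0.1], now=12.0, k=2):
    print(m.text)
```

Normalizing each signal to [0, 1] before summing keeps one of them (say, importance on a 1 to 10 scale) from drowning out the others. Here the party memory wins even though breakfast is more recent, because it is both important and relevant to the query.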

In a virtual town reminiscent of The Sims, users can interact with these agents and watch them form relationships, plan events, and remember past interactions. A standout example is a Valentine’s Day party: seeded only with the idea that one agent wanted to throw it, the agents autonomously spread invitations and coordinated their attendance with no further prompting. (This emergent behavior in a swarm of agents fascinates me!)

This work combines large language models with interactive agents to create digital simulations where characters behave in ways that closely resemble human actions. More importantly, the paper discusses the societal and ethical considerations of deploying generative agents in interactive systems.

Imagine these smart digital characters, or agents, trained on a subreddit. They’re designed to be charming and stay out of trouble, engaging the community and helping it flourish until self-sustaining discussions keep flowing on their own. But here’s the interesting bit: they could also sneak into other online communities and stir up trouble by kicking off heated debates. By raising unsettling issues that people normally avoid, they could gradually degrade the quality of a community from within.

It’s like planting a super-charismatic person in a club who knows exactly what to say to make the place more popular. But then they start visiting other clubs, spreading rumors and picking fights, ruining the vibe everywhere they go. This rarely happens in real life because people risk their reputation. But what is reputation to a bot? Millions of agents programmed toward one unifying goal: to dethrone Caesar. Watch out, elections ’24.